How Could We Use AI More Efficiently?

Large language models (LLMs), such as OpenAI’s ChatGPT, Google’s Gemini, and Meta’s Llama, have reshaped how we interact with technology, enabling realistic text generation, image creation, and answers to complex queries. However, their rise comes with growing environmental costs. In this blog post, we’ll explore these environmental challenges and offer some practical recommendations for reducing the environmental footprint of LLMs.

Energy Consumption of LLMs

All these factors contribute to a significant carbon footprint compared to the value delivered.

Practical Advice for Using LLMs Responsibly

Here are a few suggestions from the Oxide AI team on how we can all help reduce the environmental impact of modern AI.

1. Prioritize Complex Tasks: Use LLMs for challenging problems, such as understanding query intent, resolving ambiguities, and extracting complex information. Simple tasks can often be handled by far less demanding AI techniques, which matters most for high-volume workloads.

2. Use Smaller Models: Avoid overusing LLMs for well-defined tasks. Fine-tune smaller, specialized models for domain-specific tasks. Explore vendors offering robust small models, such as

3. Implement Caching: For frequent, similar requests, use a cache or a simpler AI model to recognize them and return precomputed results, reducing redundant processing and saving energy.

4. Optimize Database Interactions: For tasks involving heavy database interactions (e.g., document stores), consider architectural improvements. Use Retrieval-Augmented Generation (RAG) or embedding-based vector database lookups to reduce GPU-intensive processing. Alternatively, use LLMs to translate natural language requests into database queries, which the database can then execute efficiently with a much lower energy impact.

5. Minimize the Number of Interaction Cycles: Write well-structured prompts that include the necessary context in a single request. LLMs can tackle complex tasks efficiently when given high-quality prompts and relevant context. Avoid brute-force approaches that repeatedly sample from an LLM until an acceptable answer appears.

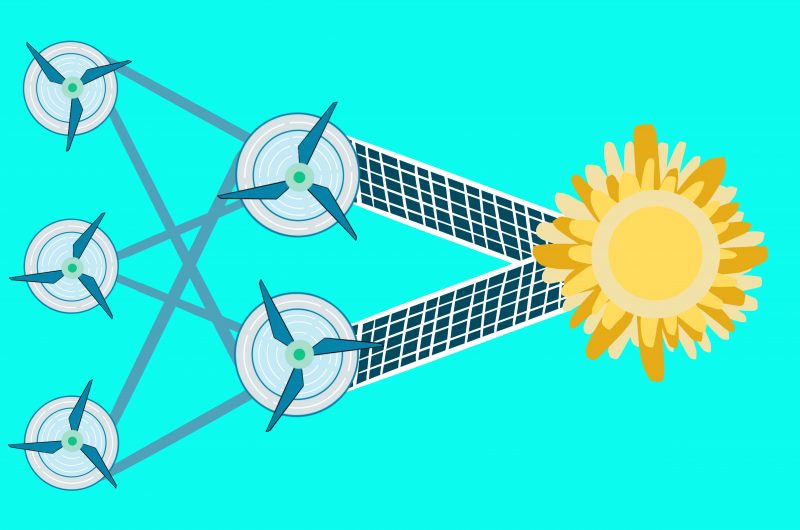

6. Hierarchical Models: Try Mixture of Experts (MoE) models, which route each token to a small number of specialized sub-networks (“experts”) so that only part of the model is active at a time, reducing computation and energy use. Another option is a hierarchy of smaller language models.
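Point 1 can be made concrete with a small router that answers simple, well-defined requests with cheap rules and forwards everything else to an LLM. This is a minimal sketch, not a production design: `call_llm` is a hypothetical placeholder for whichever model API you use, and the two regular-expression rules are illustrative assumptions standing in for any lightweight handler or classifier.

```python
import re
from typing import Callable

def call_llm(prompt: str) -> str:
    """Hypothetical placeholder for an expensive LLM request."""
    return f"[LLM answer to: {prompt}]"

def km_to_miles(match: re.Match) -> str:
    # Deterministic arithmetic needs no language model at all.
    return f"{float(match.group(1)) * 0.621371:.2f} miles"

# Cheap handlers for simple, high-volume requests (illustrative rules only).
SIMPLE_RULES = [
    (re.compile(r"^\s*(hi|hello)[!.]?\s*$", re.IGNORECASE), lambda m: "Hello! How can I help?"),
    (re.compile(r"^\s*convert (\d+(?:\.\d+)?) km to miles\s*$", re.IGNORECASE), km_to_miles),
]

def route(request: str, llm: Callable[[str], str] = call_llm) -> tuple[str, str]:
    """Return (handler, answer); only requests that no rule matches reach the LLM."""
    for pattern, handler in SIMPLE_RULES:
        match = pattern.match(request)
        if match:
            return "rules", handler(match)
    return "llm", llm(request)

print(route("Convert 10 km to miles"))  # ('rules', '6.21 miles')
print(route("Which of these two contracts has the stricter termination clause?"))
```

A real system would more likely use a small intent classifier than regular expressions; the design point is that the LLM is the fallback rather than the default path.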
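Point 3 can start as simply as an exact-match cache keyed on a normalized prompt. The sketch below assumes a hypothetical `call_llm` function and only collapses case and whitespace; the “simpler AI” variant mentioned in point 3 would instead match requests by embedding similarity (a semantic cache), which is not shown here.

```python
from typing import Callable

def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for an expensive LLM request."""
    return f"[LLM answer to: {prompt}]"

def normalize(prompt: str) -> str:
    # Collapse case and whitespace so trivially different phrasings share one entry.
    return " ".join(prompt.lower().split())

class CachedLLM:
    """Exact-match cache in front of an LLM that counts the real calls made."""

    def __init__(self, llm: Callable[[str], str] = call_llm) -> None:
        self._llm = llm
        self._cache: dict[str, str] = {}
        self.llm_calls = 0

    def ask(self, prompt: str) -> str:
        key = normalize(prompt)
        if key not in self._cache:
            self.llm_calls += 1  # cache miss: pay for one LLM request
            self._cache[key] = self._llm(prompt)
        return self._cache[key]

bot = CachedLLM()
bot.ask("What is RAG?")
bot.ask("  what is   RAG? ")
print(bot.llm_calls)  # 1
```

Cached answers can go stale, so real deployments usually add an expiry time or a size limit (for a bounded in-process cache, Python’s `functools.lru_cache` is one option).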
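The embedding + vector lookup idea in point 4 boils down to ranking stored documents by similarity to the query and sending only the best matches to the LLM. In this minimal sketch the three-dimensional vectors and documents are made-up toy data standing in for real embedding-model output and a vector database, and cosine similarity is computed in plain Python.

```python
import math

# Toy, hand-made "embeddings" (assumption): real ones come from an embedding
# model and would typically be stored in a vector database.
DOCUMENTS = {
    "Refund policy: refunds are issued within 14 days.": [0.9, 0.1, 0.0],
    "Shipping: orders ship within 2 business days.": [0.1, 0.9, 0.1],
    "Warranty: hardware is covered for one year.": [0.2, 0.1, 0.9],
}

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec: list[float], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query embedding."""
    ranked = sorted(DOCUMENTS, key=lambda doc: cosine(query_vec, DOCUMENTS[doc]), reverse=True)
    return ranked[:k]

def build_prompt(question: str, query_vec: list[float], k: int = 1) -> str:
    # Only the retrieved snippets go to the LLM, keeping the prompt short.
    context = "\n".join(top_k(query_vec, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("When will my refund arrive?", [0.85, 0.15, 0.05]))
```

The similarity search itself is cheap arithmetic; the expensive model only sees a short, relevant prompt.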
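For point 5, one way to cut interaction cycles is to send shared context and several related questions in one request instead of one call per question. The helper names below are hypothetical, and `llm` is any function that takes a prompt string and returns the model’s reply; here a recording stand-in plays that role.

```python
from typing import Callable

def build_single_prompt(context: str, questions: list[str]) -> str:
    """Combine shared context and several questions into one request."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    return (
        "Use the context below to answer each numbered question. "
        "Reply with one numbered answer per question.\n\n"
        f"Context:\n{context}\n\n"
        f"Questions:\n{numbered}"
    )

def ask_all(context: str, questions: list[str], llm: Callable[[str], str]) -> str:
    # One round trip instead of len(questions) separate calls; the shared
    # context is also sent once rather than repeated in every request.
    return llm(build_single_prompt(context, questions))

# Usage with a stand-in "LLM" that records how often it is called.
calls: list[str] = []

def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return "1. ...\n2. ...\n3. ..."

ask_all("Quarterly report text goes here.", ["Revenue?", "Costs?", "Outlook?"], fake_llm)
print(len(calls))  # 1
```

Whether a given model answers several questions reliably in one response is worth checking on your own workload, since very long combined prompts have their own cost.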
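The energy argument behind MoE in point 6 is that a gating step selects only the top-k experts, so the others are never computed. The toy sketch below shows top-k gating with plain Python functions as “experts” and fixed gate scores; in a real MoE layer both the experts and the router that produces the scores are learned neural networks, so treat this as an illustration of the routing logic only.

```python
import math
from typing import Callable

# Toy "experts" (assumption): real MoE experts are neural sub-networks.
EXPERTS: list[Callable[[float], float]] = [
    lambda x: x + 1.0,
    lambda x: x * 2.0,
    lambda x: x - 3.0,
    lambda x: x ** 2,
]

def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: float, gate_scores: list[float], k: int = 2) -> tuple[float, int]:
    """Top-k gating: evaluate only the k highest-scoring experts.

    Returns the gate-weighted output and how many experts were actually run.
    """
    top = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])  # renormalize over selected experts
    output = sum(w * EXPERTS[i](x) for w, i in zip(weights, top))
    return output, len(top)

print(moe_forward(2.0, [0.0, 1.0, 5.0, 5.0]))  # (1.5, 2)
```

With k = 2 out of 4 experts, only half of the expert computations run for this input; the same idea lets large MoE models keep the per-token cost well below their total parameter count.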